CMAssist: Human/Robot Interaction

About

We are interested in understanding how robots can best work around, interact with, and assist people in natural human environments.

Our specific research goals include:

News

July 20, 2006: CMAssist received a technical innovation award at the 2007 AAAI Mobile Robot Competition and Exhibition. The award was for Robust Hardware and Software Design.

June 18, 2006: CMAssist placed 2nd in the finals at the first-ever RoboCup@Home competition. Five teams advanced from the technical round to the finals. We ranked 1st overall in the technical round among the eleven participating teams.

People

Current Contributors

Past Contributors

Collaborators and Sponsors

Publications

Robots

Construction

Our platform was originally based on an Evolution ER1 mobile robot. We replaced the stepper motors with DC motors and our own motor controllers. We also replaced all of the plastic connecting pieces from the original ER1 kit with aluminum parts from 80/20. Additional x-bar aluminum for the robot skeletons was also purchased through 80/20.

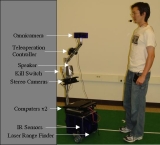

Sensors

Mounted on top of the robot is CAMEO, a custom-built high-resolution omni-directional vision system that consists of 4 separate FireWire cameras in a daisy-chain configuration. CAMEO is used for person detection and tracking as well as for object recognition and robot localization.

Lower down on the sensor mast is a stereo camera rig from Videre Design. The stereo camera is used for obstacle detection and person tracking. Around the base of the robot are close-range IR proximity sensors from Acroname. These are used solely for obstacle detection. One of our robots has a Hokuyo URG-04LX laser that is used for very accurate localization and map construction. The sensor layout of the robot is shown below:

Computation

Computation is provided by two laptops mounted on top of the robot's base. Additional computation can be provided by external computers connecting to the robot over wireless Ethernet.

Speech Recognition and Synthesis

Speech recognition uses IBM ViaVoice together with the Naval Research Lab's NAUTILUS natural language processing system. A text-to-speech system called Cepstral gives our robots the ability to speak back to the people they are working with.

Software

Due to the heavy computational demands of speech and vision processing, the software architecture is distributed: sensors and actuators run as separate processes, potentially on different computers. These processes act as network "services" that accept TCP/IP connections from the clients that need to communicate with them. Behaviors, like the sensors and actuators, also run as separate processes.

To give behaviors a consistent interface to the many sensor and actuator services, a single abstraction called the Featureset is used. The Featureset is a multi-threaded container module that provides a single unified interface to all sensors and actuators for any behavior that may need to use them. As new hardware services are added to the robot, the Featureset is expanded with new interfaces.

A block diagram of our software architecture is shown below:
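As a rough illustration of the service and Featureset pattern described above, here is a minimal Python sketch. It is not the CMAssist code: the class names (`RangeSensorHandler`, `ServiceClient`), the `register` and `read` methods, the one-JSON-object-per-line wire protocol, and the sample reading are all assumptions made for the example. It shows one sensor running as a TCP service and a thread-safe container that gives a behavior a single interface to it.

```python
import json
import socket
import socketserver
import threading


class RangeSensorHandler(socketserver.StreamRequestHandler):
    """Hypothetical sensor service: replies to each request line with one JSON reading."""

    def handle(self):
        for _ in self.rfile:  # one reply per request line, until the client disconnects
            reading = {"sensor": "ir_front", "range_m": 0.42}  # made-up sample value
            self.wfile.write((json.dumps(reading) + "\n").encode())


class ServiceClient:
    """Thin TCP client for a single service (assumed line-based JSON protocol)."""

    def __init__(self, host, port):
        self._sock = socket.create_connection((host, port), timeout=2.0)
        self._file = self._sock.makefile("rw", encoding="utf-8", newline="\n")
        self._lock = threading.Lock()  # serialize request/reply pairs across threads

    def query(self):
        with self._lock:
            self._file.write("read\n")
            self._file.flush()
            return json.loads(self._file.readline())

    def close(self):
        self._file.close()
        self._sock.close()


class Featureset:
    """Illustrative container giving behaviors one interface to all registered services."""

    def __init__(self):
        self._clients = {}
        self._lock = threading.Lock()  # protects the registry itself

    def register(self, name, host, port):
        client = ServiceClient(host, port)
        with self._lock:
            self._clients[name] = client

    def read(self, name):
        with self._lock:
            client = self._clients[name]
        return client.query()

    def close(self):
        with self._lock:
            for client in self._clients.values():
                client.close()
            self._clients.clear()


def demo():
    # Start the sensor service on a free local port, in a background thread.
    server = socketserver.ThreadingTCPServer(("127.0.0.1", 0), RangeSensorHandler)
    server.daemon_threads = True
    threading.Thread(target=server.serve_forever, daemon=True).start()
    host, port = server.server_address

    # A behavior only talks to the Featureset, never to the socket directly.
    features = Featureset()
    features.register("ir_front", host, port)
    try:
        return features.read("ir_front")
    finally:
        features.close()
        server.shutdown()
        server.server_close()


if __name__ == "__main__":
    print(demo())
```

In this sketch, supporting a new piece of hardware means starting another service and calling `register` with its name and address; behaviors keep calling `read` without knowing which machine the service runs on.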


Photos

CMAssist'06 -- 2nd Place Finalists in RoboCup'06

Here are our robots: Carmela (in red) and Erwin (in blue). This is the configuration of the robots after they returned from RoboCup 2006. Modifications made on-site included mounting the robot's speakers as well as providing a permanent place for the joysticks. We placed 2nd in the finals and 1st in the preliminary technical round in the 2006 RoboCup@Home league.


Robot Design Before Leaving for RoboCup'06

Here is Carmela before we packed her up to ship to RoboCup 2006 in Bremen, Germany. Erwin traveled there as well, not to compete directly but to serve as a backup in case of equipment failure on Carmela.

Robot Design Revision 2

Here is the robot prototype from early May 2006. We enlarged the bottom area to house more electronics and added the emergency kill switch.


Robot Design Original

Here is the original robot design as developed in January 2006.
