Research Interests

My research has focused on how robots can operate robustly in human environments (indoor spaces, roadways, industrial settings, etc.) and, in particular, how they can interact effectively with the people who inhabit those spaces. This page describes my work at The Robotics Institute at Carnegie Mellon University as well as my earlier graduate research.

Inferring Adversarial Intent with Automated Exploratory Behaviors 2010-2012

Autonomous robots that encounter other autonomous agents, be they other robots or people, need to infer the activity or intent of those agents to decide which behaviors to invoke. In this project, the term "adversarial" refers to any intent that is not automatically assumed to be explicitly and deliberately cooperative. We are interested in extending work on passive activity recognition, in which the observed agent's intent or activity is inferred only by analyzing the observation history. Instead, the autonomous robot explicitly executes actions or behaviors that will affect the observed agent's behavior, so that the agent's response helps reveal its intent.
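One way to make exploratory behaviors concrete is to treat each probing action as an experiment and choose the one with the greatest expected information gain about the other agent's intent. The sketch below assumes a small, discrete set of intents and a known table of response likelihoods for each action; the action names, responses, and probabilities are all hypothetical, and this is a generic Bayesian formulation rather than the project's actual method.

```python
import math

def entropy(belief):
    """Shannon entropy (bits) of a discrete distribution stored as a dict."""
    return -sum(p * math.log2(p) for p in belief.values() if p > 0)

def bayes_update(belief, likelihood, action, response):
    """Posterior over intents after seeing `response` to the robot's `action`."""
    post = {i: p * likelihood[action][i].get(response, 0.0) for i, p in belief.items()}
    z = sum(post.values())
    return {i: p / z for i, p in post.items()}

def expected_info_gain(belief, likelihood, action):
    """Expected reduction in entropy over intents from executing `action`."""
    responses = {r for i in belief for r in likelihood[action][i]}
    gain = entropy(belief)
    for r in responses:
        p_r = sum(p * likelihood[action][i].get(r, 0.0) for i, p in belief.items())
        if p_r > 0:
            gain -= p_r * entropy(bayes_update(belief, likelihood, action, r))
    return gain

# Hypothetical model: P(observed response | intent, probing action)
likelihood = {
    "wait": {"cooperative": {"yield": 0.5, "continue": 0.5},
             "adversarial": {"yield": 0.5, "continue": 0.5}},
    "approach": {"cooperative": {"yield": 0.9, "continue": 0.1},
                 "adversarial": {"yield": 0.2, "continue": 0.8}},
}
belief = {"cooperative": 0.5, "adversarial": 0.5}

# Pick the probing action whose outcome is expected to be most informative
best = max(likelihood, key=lambda a: expected_info_gain(belief, likelihood, a))
# Suppose the other agent does not yield when approached
belief = bayes_update(belief, likelihood, best, "continue")
```

Waiting teaches the robot nothing here, because both intents respond identically; approaching separates them, and a single "continue" response shifts most of the belief toward the adversarial hypothesis.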

To learn more, visit the official project home page (hosted at archive.org).

Intelligent Infrastructure for Automated People Movers 2010-2012

We are exploring the science and engineering questions of how to make public transportation systems more intelligent in order to improve their safety and efficiency and to reduce the overall cost of the supporting infrastructure. We are evaluating LIDAR-based measurement systems, inertial measurement systems, GPS, and other low-cost infrastructure sensors as cost-effective ways to improve safety, reliability, and efficiency.

To learn more, visit the official project home page (hosted at archive.org).

Intelligent Monitoring of Assembly Operations 2009-2012

Our research uses sensor fusion and activity recognition to optimize the efficiency of industrial workcells. Our goal is to allow people and intelligent, dexterous machines to work together safely as partners in the assembly operations performed within these workcells. To ensure the safety of people working amid active robotic devices, we use vision and 3D sensing technologies, such as stereo cameras and flash LIDAR, to detect and track people and other moving objects within the workcell.

Autonomous Driving Collaborative Research Laboratory (ADCRL) 2008-2012

General Motors and Carnegie Mellon University created a new Collaborative Research Laboratory (CRL) that focuses on technologies for Autonomous Driving (AD) research. This new lab, called the ADCRL, has four core research areas: architectures, behaviors, perception, and planning. I am interested in methods for fusing data from automotive sensors such as cameras and radar with context from the surrounding environment so that an autonomous vehicle can more robustly and reliably detect the presence of people and bicycles in its vicinity.

Snackbot 2008-2012

The Snackbot is a social delivery robot designed to navigate between offices at CMU and deliver food to subscribers of its services. The Snackbot project seeks to understand how robots can effectively interact with humans and operate alongside people in indoor environments. This effort focuses on developing methods for robots to perceive humans, understand their activities, and observe how they interact with their environment.

Tartan Racing and the DARPA Urban Challenge 2007

I joined Tartan Racing as the perception lead for CMU's effort to win the 2007 DARPA Urban Challenge, a contest to promote the research and development of autonomous cars that operate safely in on-road (urban) settings. I was actively involved in developing two of the robot's perception components. The first was the moving obstacle fuser, a multi-target/multi-hypothesis probabilistic state estimation system (using Kalman filters) that took inputs from the vehicle's wide range of sensor modalities (multiple lasers and radars) and produced a single consistent set of tracked objects. The second was the road fusion module, which estimated the shape of the road in front of the robot using six different laser systems that identified geometric road features such as curbs and obstacles. Tartan Racing took first place in the 2007 DARPA Urban Challenge.
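At the heart of a fuser like this is a Kalman filter that accepts measurements from sensors with different noise levels. The following is a minimal sketch of a single track under a constant-velocity model, written per axis in plain Python (with independent x and y noise, a 2D constant-velocity filter decouples into two such filters). It deliberately omits the multi-target data association and multi-hypothesis management that the actual system required, and the class name and sensor variances are hypothetical.

```python
class CVTrack1D:
    """Constant-velocity Kalman filter along one axis with state [p, v]."""

    def __init__(self, p0, p_var=1.0, v_var=10.0, accel_var=1.0):
        self.p, self.v = p0, 0.0
        # Symmetric 2x2 covariance stored as its three distinct entries
        self.Ppp, self.Ppv, self.Pvv = p_var, 0.0, v_var
        self.accel_var = accel_var  # white-acceleration process noise

    def predict(self, dt):
        # x' = F x with F = [[1, dt], [0, 1]]
        self.p += self.v * dt
        q = self.accel_var
        # P' = F P F^T + Q, where Q = q * G G^T and G = [dt^2 / 2, dt]
        Ppp = self.Ppp + 2 * dt * self.Ppv + dt * dt * self.Pvv + q * dt**4 / 4
        Ppv = self.Ppv + dt * self.Pvv + q * dt**3 / 2
        Pvv = self.Pvv + q * dt**2
        self.Ppp, self.Ppv, self.Pvv = Ppp, Ppv, Pvv

    def update(self, z, meas_var):
        # H = [1, 0]: every sensor here reports position only
        s = self.Ppp + meas_var               # innovation variance
        kp, kv = self.Ppp / s, self.Ppv / s   # Kalman gain
        r = z - self.p                        # innovation
        self.p += kp * r
        self.v += kv * r
        # P = (I - K H) P, using the pre-update entries on the right-hand side
        self.Pvv -= kv * self.Ppv
        self.Ppp *= 1 - kp
        self.Ppv *= 1 - kp

SENSOR_VAR = {"laser": 0.01, "radar": 0.25}  # hypothetical noise variances (m^2)
track_x, track_y = CVTrack1D(0.0), CVTrack1D(0.0)
dt = 0.1
for k in range(1, 101):
    t = k * dt
    sensor = "laser" if k % 2 else "radar"  # alternate sensor modalities
    track_x.predict(dt)
    track_y.predict(dt)
    # Noise-free detections of an object moving at 2 m/s along x
    track_x.update(2.0 * t, SENSOR_VAR[sensor])
    track_y.update(0.0, SENSOR_VAR[sensor])
```

Because each update uses that sensor's own variance, a precise laser return pulls the estimate harder than a noisy radar return, which is what lets heterogeneous sensors contribute to one consistent track.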

CMAssist 2006-2008

My students and I built a team of robots to compete in a new RoboCup league called RoboCup@Home. The @Home league is a departure from the more traditional RoboCup domains, which focus their research on multi-agent soccer. Instead, RoboCup@Home focuses on socially relevant human/robot interaction in a home environment. Our robots, named Erwin and Carmela, were capable of visually tracking and following people, navigating safely through an environment, understanding spoken commands, and learning simple tasks by observing and interacting with people. We placed 2nd in the first-ever competition, held in Bremen, Germany, in 2006. Another focus of the CMAssist effort was finding ways for humans to impart procedural task knowledge to robots using natural communication mechanisms such as speech and physical demonstration.

CAMEO (CALO-PA) 2003-2006

The Camera Assisted Meeting Event Observer (CAMEO) is an omnidirectional sensory system designed to provide an intelligent computer agent with physical awareness of the real world. Because it is a stationary camera, CAMEO has the advantage of only needing to model and track the objects around it rather than also having to estimate its own motion. However, very few assumptions can be made about the exact structure of the environment or about CAMEO's absolute position within it. I developed an action recognition algorithm that uses the tracked relative motions of human faces as input. This technique is now being used to learn specific objects in the environment based on people's activities. Through this functional object recognition algorithm, we are able to model objects associated with the tracked activities, e.g., chairs, by identifying the objects on which people sit. The CAMEO project was part of a much larger effort called CALO (Cognitive Agent that Learns and Organizes).

AIBO Soccer 2003-2005

We are investigating the complexities of dynamic, real-time adversarial environments with an AIBO RoboCup soccer team. In the RoboCup legged league, teams of four AIBOs compete against one another in two ten-minute halves. Because the AIBOs are quadrupedal robots, their motions are extremely difficult to model correctly, particularly when they are being jostled by other players. The low viewing angle and narrow field of view of their cameras make it difficult to obtain an accurate view of all the objects around them. I am primarily interested in using communication to create an accurate shared world model in the face of very noisy sensors and actuators. An accurate shared world model is extremely important for effective teamwork because it lets the robots model the effects of their teammates' actions and plan accordingly.
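As a minimal sketch of how communicated estimates could be merged into a shared world model, the code below fuses teammates' ball-position estimates by inverse-variance weighting. It assumes every robot reports a Gaussian estimate in a common field frame with an isotropic variance and that the robots' errors are independent; the `BallEstimate` type and `fuse` function are hypothetical and are not the team's actual protocol.

```python
from dataclasses import dataclass

@dataclass
class BallEstimate:
    """One robot's belief about the ball, in global field coordinates."""
    x: float
    y: float
    var: float  # isotropic position variance (m^2); larger means less certain

def fuse(estimates):
    """Inverse-variance weighted fusion of independent Gaussian estimates."""
    usable = [e for e in estimates if e.var > 0]
    if not usable:
        raise ValueError("need at least one estimate with positive variance")
    weights = [1.0 / e.var for e in usable]
    total = sum(weights)
    x = sum(w * e.x for w, e in zip(weights, usable)) / total
    y = sum(w * e.y for w, e in zip(weights, usable)) / total
    # The fused variance is smaller than any single input variance
    return BallEstimate(x, y, 1.0 / total)

# A distant robot with a poor view and a nearby teammate with a good one
fused = fuse([BallEstimate(1.0, 2.0, 1.0), BallEstimate(1.4, 2.2, 0.25)])
```

The nearby teammate's estimate dominates because of its lower variance. If robots' errors are correlated (for example, through shared localization mistakes), or if an estimate that already includes a teammate's data is fused again, this simple rule becomes overconfident.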

I co-instructed a course taught with the Sony AIBO. The archived course materials are available for download.

Segway Soccer 2003-2004

The SegwayRMP project investigates the coordination of dynamically formed, mixed human-robot teams within a team task that requires real-time decision making and response. We are working toward the realization of Segway Soccer, a game of soccer between two teams consisting of Segway-riding humans and Segway RMP-based robots. The SegwayRMP's sensors consist of a servo-mounted color camera, and the robot has a pneumatic kicker with an actuated ball manipulation device. I developed an Extended Kalman Filter SLAM implementation for the Segway that allows it to stay within the boundaries of the field, and I helped develop the initial software infrastructure for the Segway's perceptual systems. I am interested in extending my work in human activity recognition into this domain to allow humans and robots to play soccer cooperatively.

PhD Dissertation 2003

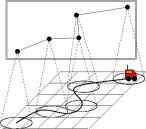

My dissertation, entitled "Building Topological Maps with Minimalistic Sensor Models", addresses the problem of simultaneous localization and mapping for miniature robots that have extremely poor odometry and sensing capabilities. Existing robotic mapping algorithms generally assume that the robots have good odometric estimates and have sensors that can return the range or bearing to landmarks in the environment. This work focuses on solutions to this problem for robots where the above assumptions do not hold.

A novel method is presented for a sensor-poor mobile robot to create a topological estimate of its path through an environment by using the notion of a virtual sensor that equates "place signatures" with physical locations in space. The method is applicable in the presence of extremely poor odometry and does not require sensors that return spatial (range or bearing) information about the environment. Without sensor updates, the robot's path estimate degrades due to the odometric errors in its position estimates. When the robot revisits a location, the geometry of the map can be constrained so that it corrects for the odometric error and better matches the true path.

Several maximum likelihood estimators are derived using this virtual sensor methodology. The first estimator uses a physics-inspired mass-and-spring model to represent the uncertainties in the robot's position and motion. Errors are corrected by relaxing the spring model through numerical simulation to the state of least potential energy. The second method finds the maximum likelihood solution by linearizing a chi-squared error function. This method has the advantage of explicitly dealing with dependencies between the robot's linear and rotational errors. Finally, the third method employs the iterated form of the Extended Kalman Filter. This method has the advantage of providing a real-time update of the robot's position, whereas the others process all of the data at once.
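The mass-and-spring idea can be illustrated with a simplified sketch: consecutive path nodes are joined by springs whose rest vectors are the odometry displacements, a stiffer spring with zero rest length joins two nodes that share a place signature, and the network is relaxed by gradient descent on its potential energy. This assumes 2D positions only (no heading), isotropic stiffnesses, and hypothetical parameter values; it is not the dissertation's formulation, which models the robot's position and motion uncertainties in more detail.

```python
def relax(odometry, loops, stiffness_odo=1.0, stiffness_loop=10.0,
          step=0.05, iters=2000):
    """Relax a chain of 2D path nodes connected by linear springs.

    odometry: list of (dx, dy) displacements between consecutive nodes.
    loops: list of (i, j) node pairs believed to be the same place.
    Returns a list of (x, y) positions; node 0 stays fixed at the origin.
    """
    # Dead-reckoned initial guess from integrating odometry
    pts = [[0.0, 0.0]]
    for dx, dy in odometry:
        pts.append([pts[-1][0] + dx, pts[-1][1] + dy])
    # Each spring: (i, j, rest_dx, rest_dy, stiffness)
    springs = [(i, i + 1, dx, dy, stiffness_odo) for i, (dx, dy) in enumerate(odometry)]
    springs += [(i, j, 0.0, 0.0, stiffness_loop) for i, j in loops]
    for _ in range(iters):
        force = [[0.0, 0.0] for _ in pts]
        for i, j, dx, dy, k in springs:
            # Spring extension relative to its rest vector
            ex = pts[j][0] - pts[i][0] - dx
            ey = pts[j][1] - pts[i][1] - dy
            # Forces are minus the gradient of 0.5 * k * |e|^2
            force[i][0] += k * ex
            force[i][1] += k * ey
            force[j][0] -= k * ex
            force[j][1] -= k * ey
        for n in range(1, len(pts)):  # node 0 anchors the map
            pts[n][0] += step * force[n][0]
            pts[n][1] += step * force[n][1]
    return [tuple(p) for p in pts]

# A unit square whose last odometry leg has drifted 0.2 m in x
odometry = [(1.0, 0.0), (0.0, 1.0), (-1.0, 0.0), (0.2, -1.0)]
pts = relax(odometry, loops=[(0, 4)])  # node 4 has node 0's place signature
```

With these stiffnesses the 0.2 m closure error is absorbed almost entirely by the compliant odometry springs, so the revisited node ends up within about 5 mm of the start; making the loop spring stiffer expresses more confidence that the two signatures really are the same place.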

Lastly, a method is presented for dealing with multiple locations that cannot be disambiguated because their signatures appear to be identical. To decide which sensor readings are associated with which positions in space, the robot's sensor readings and motion history are used to calculate a discrete probability distribution over all possible robot positions.
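A generic discrete Bayes filter shows how motion history can disambiguate places with identical signatures. This sketch assumes a known set of places, known transition probabilities between them, and a binary match model in which a reading agrees with the true place's signature with probability `hit`; the place names and numbers are hypothetical, and this is not necessarily the dissertation's exact formulation.

```python
def normalize(dist):
    total = sum(dist.values())
    return {k: v / total for k, v in dist.items()}

def predict(belief, transitions):
    """Motion update: transitions[a][b] = P(robot is now at b | it was at a)."""
    out = {}
    for a, pa in belief.items():
        for b, pab in transitions[a].items():
            out[b] = out.get(b, 0.0) + pa * pab
    return out

def correct(belief, reading, signature_of, hit=0.9):
    """Sensor update: a reading matches the true place's signature with prob `hit`."""
    return normalize({
        place: p * (hit if signature_of[place] == reading else 1.0 - hit)
        for place, p in belief.items()
    })

# Hypothetical loop of four places; A and C look identical to the sensor
signature_of = {"A": "door", "B": "wall", "C": "door", "D": "corner"}
transitions = {"A": {"B": 1.0}, "B": {"C": 1.0}, "C": {"D": 1.0}, "D": {"A": 1.0}}

belief = {place: 0.25 for place in signature_of}
belief = correct(belief, "door", signature_of)    # A and C remain tied
tied = dict(belief)
belief = predict(belief, transitions)             # robot moves one place forward
belief = correct(belief, "corner", signature_of)  # only D looks like a corner
```

After the first reading, A and C are equally likely; once the robot moves and observes a corner, the belief concentrates on D, which in turn implies that the earlier "door" reading came from C.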

Graduate Research at the University of Minnesota 1995-2003

I worked on a DARPA program that focused on the development of distributed robotic systems. Our team at the University of Minnesota developed a team of very small robots (approximately the size of a can of soda) called the Scout. This robot could be teleoperated, could transmit video back to the operator, and was capable of jumping using an actuated leaf spring. The need for these robots to navigate their environment autonomously and construct a spatially consistent map was the foundation for my PhD thesis work. My master's degree focused on a software architecture that could control a team of these Scout robots as a single distributed sensor network.

Links to the labs where I did my graduate work can be found below: